Vision Automates Jar Inspection - Adbro Controls Ltd.

A vision-based inspection system ensures that jars of food products meet stringent quality control requirements.

Jars of foodstuffs must be inspected prior to shipment to ensure that they have an expiry date code printed on their lids, that the correct labels have been affixed and that their tamper-proof seals are present.

In the past, food jars were inspected manually, a time-consuming and expensive process that was prone to human error. To reduce the cost of manual inspection and improve efficiency, AarhusKarlshamn (AAK; Runcorn, England; www.aak.com) turned to Adbro Controls to develop an automated vision-based system to inspect its products.

Because it can be reconfigured under software control, the system can inspect jars of different shapes and sizes containing food products such as mayonnaise, mustard and salad dressing.

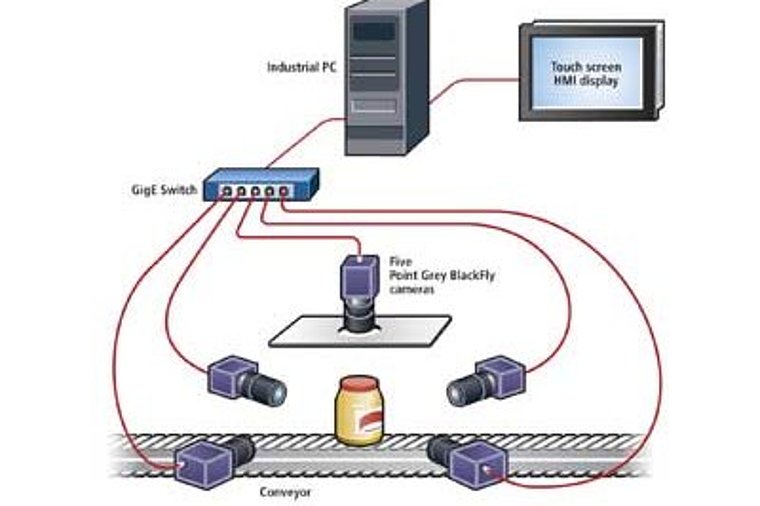

In operation, jars of a given food product are carried into the vision system at 0.5 m/s on a conveyor from Bosch Rexroth (St. Neots, England; www.boschrexroth.co.uk). On entering the system, each jar triggers a 07P200 07P-DPKG opto-reflective sensor from IFM Electronic (Hampton, England; www.ifm.com). A DFS60 encoder from SICK (St Albans, England; www.sick.com) mounted on the conveyor then tracks the position of the jar until it reaches the optimum imaging position inside the system. There, five cameras are triggered to capture a 360° view of the outside of the jar and an image of its lid (Figure 1).
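The handoff from entry sensor to encoder-timed camera trigger can be sketched as follows. This is a minimal Python illustration, not Adbro's C# code: the encoder resolution (`COUNTS_PER_MM`) and the sensor-to-camera distance are hypothetical values, and encoder counter wrap-around is ignored.

```python
from collections import deque

# Hypothetical values for illustration; the article gives the belt speed
# (0.5 m/s) but not the encoder resolution or the station geometry.
COUNTS_PER_MM = 4.0            # assumed encoder counts per mm of belt travel
SENSOR_TO_CAMERAS_MM = 250.0   # assumed entry-sensor-to-imaging-position distance


class JarTracker:
    """Schedules one camera trigger per jar, keyed to encoder counts."""

    def __init__(self, counts_per_mm: float, sensor_to_cameras_mm: float) -> None:
        self.offset_counts = round(counts_per_mm * sensor_to_cameras_mm)
        self.pending: deque = deque()  # due trigger counts, oldest jar first

    def on_entry_sensor(self, encoder_count: int) -> None:
        # The jar has just tripped the entry sensor; it will be in position
        # once the belt has advanced by offset_counts.
        self.pending.append(encoder_count + self.offset_counts)

    def poll(self, encoder_count: int) -> int:
        """Return how many camera triggers are due at this encoder count."""
        due = 0
        while self.pending and encoder_count >= self.pending[0]:
            self.pending.popleft()
            due += 1
        return due
```

Because jars cannot overtake one another on the belt, a first-in, first-out queue of due counts is enough to keep several jars in flight between the sensor and the cameras.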

Four of the five cameras, BFLY-PGE-13E4C Blackfly color models from Point Grey (Richmond, BC, Canada; www.ptgrey.com), are fitted with 6 mm focal length CS-mount lenses and mounted in the x/y plane, 300 mm from the jar, to capture four images of its outside. Each camera is equipped with a polarizing filter so that light from the white-light LEDs does not saturate the imager of the camera directly opposite it.
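As a rough check on what each side camera sees, the pinhole approximation (field of view ≈ sensor size × working distance ÷ focal length) can be applied to the 6 mm lens and 300 mm distance. The sensor format used below (1280 × 1024 pixels at 5.3 µm) is an assumption, not a figure from the article; substitute the values from the camera's datasheet.

```python
def field_of_view_mm(sensor_mm: float, working_distance_mm: float,
                     focal_length_mm: float) -> float:
    # Pinhole approximation: object-side extent scales by distance / focal length.
    return sensor_mm * working_distance_mm / focal_length_mm


PIXELS_H, PIXELS_V = 1280, 1024  # assumed sensor resolution
PIXEL_PITCH_MM = 0.0053          # assumed 5.3 um pixel pitch

sensor_w = PIXELS_H * PIXEL_PITCH_MM
sensor_h = PIXELS_V * PIXEL_PITCH_MM
fov_w = field_of_view_mm(sensor_w, 300.0, 6.0)  # 300 mm distance, 6 mm lens
fov_h = field_of_view_mm(sensor_h, 300.0, 6.0)
print(f"FOV {fov_w:.0f} x {fov_h:.0f} mm, {fov_w / PIXELS_H:.3f} mm/pixel")
# prints: FOV 339 x 271 mm, 0.265 mm/pixel
```

Under these assumptions each side camera covers roughly 339 × 271 mm at about 0.27 mm per pixel; the estimate ignores lens distortion and the curvature of the jar.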

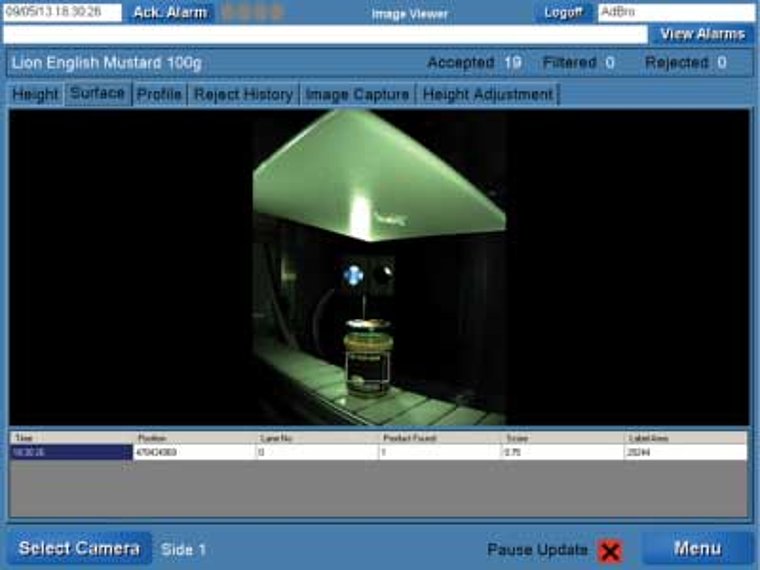

The fifth Blackfly camera, which captures an image of the top of the jar, is moved vertically under PC control to accommodate jars of different heights. Its lens protrudes through an aperture cut into an opaque hard plastic sheet, which provides an inexpensive alternative to a dome light: light from the four LEDs in the x/y plane is reflected from the sheet to illuminate the top of the jar in a diffuse, uniform manner (Figure 2).

Once the cameras have been triggered and have captured their images, the image data are transferred over GigE interfaces through a five-port GigE switch from Moxa (Taipei, Taiwan; www.moxa.com) to a multicore Intel Core i7 PC, where the five images are processed by the vision inspection software.

Software solution

All the software components of the vision inspection system were developed in C#. Together, they calculate the position of each jar along the conveyor, trigger the cameras to acquire images of it and determine whether the jar is faulty. If it is, a pneumatic rejection system is triggered to remove the jar from the line.

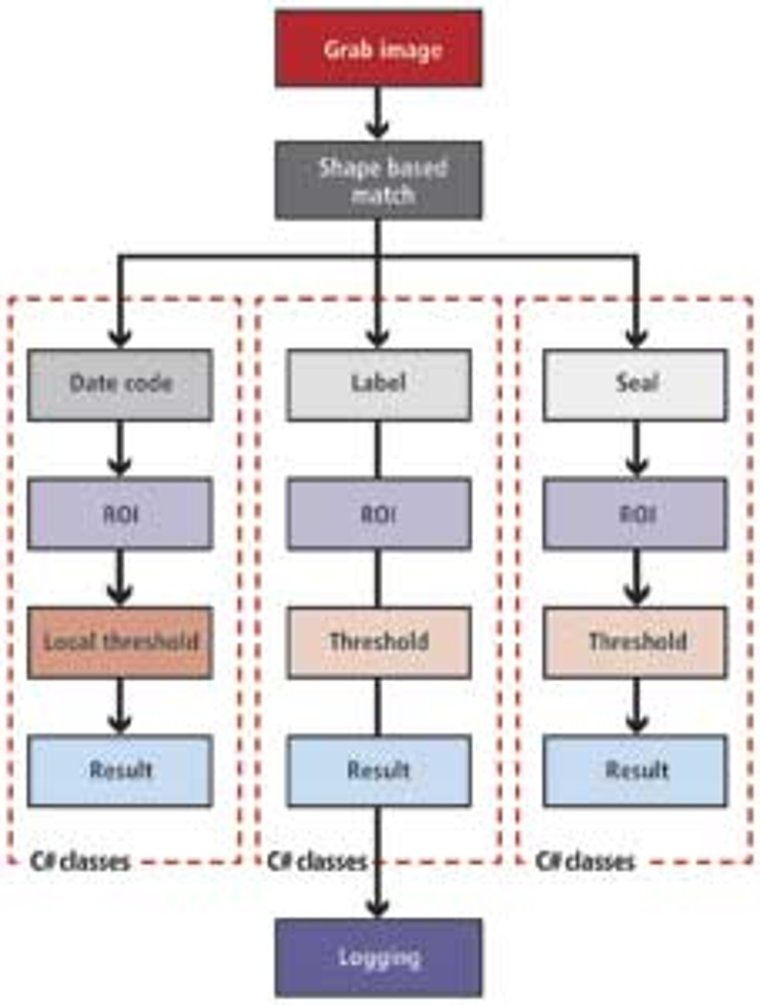

To check for the presence of the date code, label and seal, the image processing component of the system uses a separate C# class for each inspection procedure. These classes call image processing routines from the HALCON library from MVTec Software (Munich, Germany; www.mvtec.com) (Figure 3).

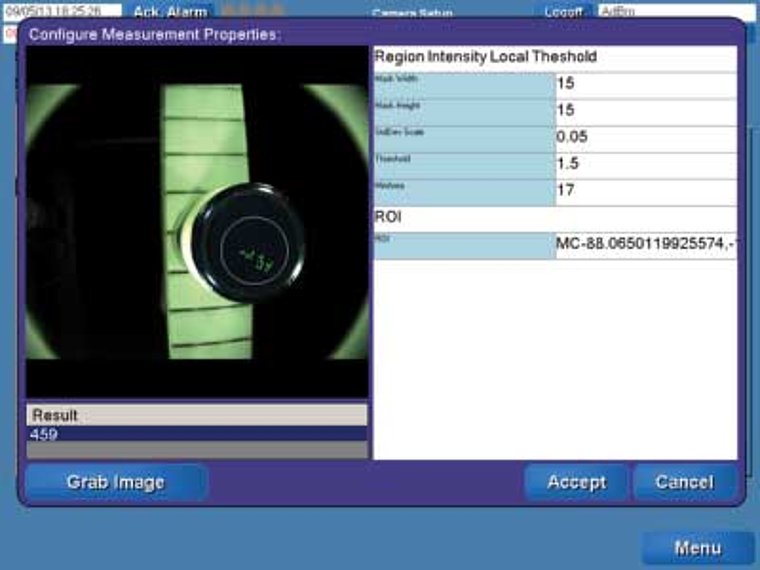

The properties of the image processing routines used within each class, such as the color values of the threshold operations performed on the images, are exposed through an HMI component, also written in C#, so that users can modify them in a graphical user interface.
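The class-per-inspection design, with tunable properties exposed to an HMI, might look like this in outline. It is a hypothetical Python sketch (`Inspection`, `RedLabelCheck` and their parameters are invented for illustration) rather than the system's C# code; a real class would call HALCON operators where this toy check simply counts pixels.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, fields

Pixel = tuple[int, int, int]  # (R, G, B)


class Inspection(ABC):
    """Base for the per-procedure inspection classes (hypothetical names)."""

    @abstractmethod
    def run(self, pixels: list[Pixel]) -> bool:
        """Return True if the feature being checked is present."""

    def parameters(self) -> dict[str, object]:
        # Subclasses are dataclasses, so their fields are the tunable settings
        # that an HMI could list for an operator to edit.
        return {f.name: getattr(self, f.name) for f in fields(self)}


@dataclass
class RedLabelCheck(Inspection):
    """Toy check: enough pixels fall inside a red-channel window."""
    red_min: int = 150
    red_max: int = 255
    min_fraction: float = 0.6

    def run(self, pixels: list[Pixel]) -> bool:
        if not pixels:
            return False
        inside = sum(1 for r, _, _ in pixels if self.red_min <= r <= self.red_max)
        return inside >= self.min_fraction * len(pixels)
```

An HMI could display `RedLabelCheck().parameters()` as editable fields and apply an operator's change with `dataclasses.replace(check, red_min=180)`, leaving the inspection logic itself untouched.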

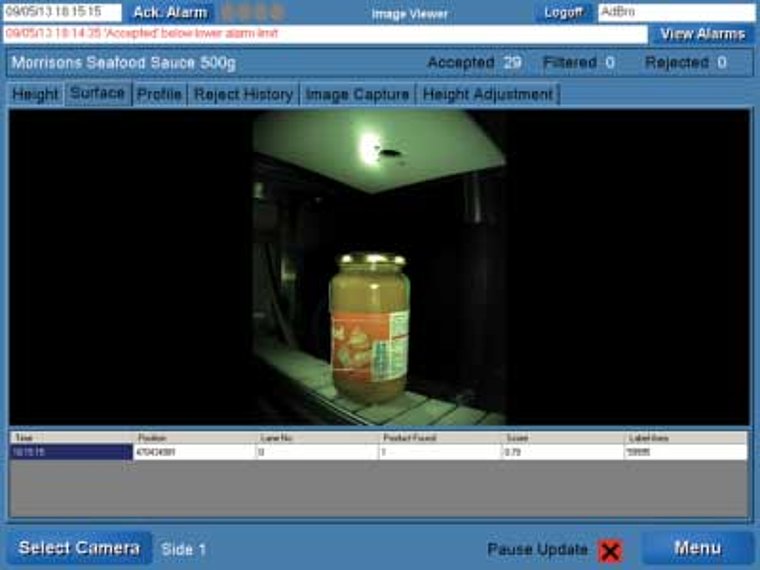

Before the image processing operations can be performed, the system must be trained to locate a specific type of jar in an image. To do so, a jar is first placed inside the imaging station and its image captured. A shape-based model is then created of the curved region in the image running from the top of the cap to the body. This model, unique to each product type, and the location of the label relative to it are then saved to the system's database (Figure 4).

During a production run, the system finds the same shape using a shape-based matching algorithm from the MVTec image processing library, which gives the precise position of the jar in the image. From this position, the region of interest in the image that contains the label can be derived.
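Deriving the label's region of interest from the match result amounts to applying the found pose (position plus rotation) to ROI corners stored relative to the model at training time. The sketch below assumes a generic x/y pixel frame with angles in radians; the names are hypothetical, and the sign convention for the angle depends on the matching library, so it should be checked against the HALCON documentation before reuse.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    x: float      # matched model origin in the image (pixels)
    y: float
    angle: float  # rotation relative to the trained model (radians)


def roi_corners(pose: Pose,
                corners_rel: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Map ROI corners stored relative to the model origin into the current image."""
    c, s = math.cos(pose.angle), math.sin(pose.angle)
    # Rotate each stored offset by the match angle, then translate to the match position.
    return [(pose.x + dx * c - dy * s, pose.y + dx * s + dy * c)
            for dx, dy in corners_rel]
```

At training time the label rectangle would be stored once as offsets from the model origin; in production the same offsets follow the jar wherever the matcher finds it.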

During setup, the system is also taught a range of acceptable colors for the label. In a production run, the region of the image where a label should be present is processed with a color thresholding algorithm to determine whether its color falls within the specified tolerances.
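A minimal sketch of teaching a color range and then checking the label region against it follows. It assumes the range is learned as the per-channel minimum and maximum of known-good label pixels, widened by a margin, and that a label passes when a set fraction of its pixels fall inside the range. Both rules and all names are assumptions for illustration, since the article does not say how the tolerances are defined; a hue channel would also need wrap-around handling, which this ignores.

```python
from dataclasses import dataclass

Color = tuple[float, float, float]


@dataclass(frozen=True)
class ColorRange:
    low: Color
    high: Color

    def contains(self, c: Color) -> bool:
        return all(lo <= v <= hi for lo, v, hi in zip(self.low, c, self.high))


def teach_range(samples: list[Color], margin: float) -> ColorRange:
    """Learn per-channel limits from a known-good label, widened by a margin."""
    lows = tuple(min(s[i] for s in samples) - margin for i in range(3))
    highs = tuple(max(s[i] for s in samples) + margin for i in range(3))
    return ColorRange(lows, highs)


def label_ok(roi: list[Color], rng: ColorRange, min_fraction: float = 0.8) -> bool:
    """Pass if enough pixels in the label region fall inside the taught range."""
    if not roi:
        return False
    inside = sum(rng.contains(p) for p in roi)
    return inside / len(roi) >= min_fraction
```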

Although the color Blackfly cameras return RGB values, these are converted into HSI color space for processing. HSI was chosen because it closely matches how humans perceive color, which usually makes it easier to find a good threshold, although the system also supports setting thresholds in RGB color space.
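For reference, the textbook RGB-to-HSI conversion is shown below. HALCON provides its own color-space transformations, whose channel scaling may differ from this version; the function illustrates the color space and is not the system's code.

```python
import math


def rgb_to_hsi(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Convert 8-bit RGB to (hue in degrees, saturation 0-1, intensity 0-1)."""
    rf, gf, bf = r / 255.0, g / 255.0, b / 255.0
    total = rf + gf + bf
    intensity = total / 3.0
    if total == 0:
        return 0.0, 0.0, 0.0  # black: hue and saturation are undefined
    saturation = 1.0 - 3.0 * min(rf, gf, bf) / total
    num = 0.5 * ((rf - gf) + (rf - bf))
    den = math.sqrt((rf - gf) ** 2 + (rf - bf) * (gf - bf))
    if den == 0:
        return 0.0, saturation, intensity  # gray: hue is undefined
    # Clamp against rounding before acos; hue is measured from red.
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    hue = theta if bf <= gf else 360.0 - theta
    return hue, saturation, intensity
```

Separating hue from intensity is what makes thresholding easier in practice: a label that is slightly darker under uneven lighting keeps roughly the same hue, so a hue band tolerates shading that would shift all three RGB values.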

The tamper-proof seal is typically a colored tab affixed to the top and body of the jar. To check that it is present, the system again uses shape-based matching to locate the jar in the image and then identifies a region of interest where the seal is likely to be found. A color thresholding algorithm from the HALCON library then removes the parts of the image that fall within a specified color range, so that objects with color values outside that range, such as the seal, can be identified.
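The seal check, removing pixels in the expected background color range and looking for what remains, can be sketched with a simple flood fill. The background limits and the minimum blob area (`min_area`) are hypothetical parameters, and the 4-connected flood fill stands in for whatever region analysis the HALCON-based implementation actually uses.

```python
from collections import deque

Color = tuple[int, int, int]


def in_range(c: Color, low: Color, high: Color) -> bool:
    return all(lo <= v <= hi for lo, v, hi in zip(low, c, high))


def seal_present(roi: list[list[Color]], bg_low: Color, bg_high: Color,
                 min_area: int) -> bool:
    """Remove background-colored pixels, then look for a large enough leftover blob."""
    rows, cols = len(roi), len(roi[0]) if roi else 0
    mask = [[not in_range(roi[r][c], bg_low, bg_high) for c in range(cols)]
            for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Flood-fill one 4-connected component and measure its area.
            area, queue = 0, deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                area += 1
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if area >= min_area:
                return True
    return False
```

Requiring a minimum area keeps isolated noise pixels or specular highlights outside the background range from being mistaken for a seal.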

As with label and seal detection, the date code detection software first uses shape-based matching to locate the jar in the image. From this, the system identifies a region of interest on the lid in which the expiry code is likely to fall. A local color thresholding algorithm is then applied to extract any date code from this region, allowing the system to determine whether the date code is present or absent (Figure 5).
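A local threshold compares each pixel with its own neighborhood rather than with a single global level, which tolerates gradual shading across a curved lid. The sketch below marks pixels darker than their local box mean by more than a fixed offset and treats the code as present if enough pixels are marked. The window radius, offset and pixel count are hypothetical, and it works on a grayscale image, whereas the article describes a color variant.

```python
def local_threshold(gray: list[list[int]], radius: int, offset: int) -> list[list[bool]]:
    """Mark pixels darker than their local box mean by more than `offset`."""
    rows, cols = len(gray), len(gray[0])
    # Summed-area table, so each window sum costs O(1).
    sat = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        for c in range(cols):
            sat[r + 1][c + 1] = gray[r][c] + sat[r][c + 1] + sat[r + 1][c] - sat[r][c]
    out = []
    for r in range(rows):
        r0, r1 = max(0, r - radius), min(rows, r + radius + 1)
        row = []
        for c in range(cols):
            c0, c1 = max(0, c - radius), min(cols, c + radius + 1)
            total = sat[r1][c1] - sat[r0][c1] - sat[r1][c0] + sat[r0][c0]
            mean = total / ((r1 - r0) * (c1 - c0))  # window clipped at image edges
            row.append(gray[r][c] < mean - offset)
        out.append(row)
    return out


def date_code_present(gray: list[list[int]], radius: int = 3, offset: int = 20,
                      min_pixels: int = 10) -> bool:
    return sum(map(sum, local_threshold(gray, radius, offset))) >= min_pixels
```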

The images from each camera are processed in their own thread, and the operating system is free to assign these threads to the processor cores as needed. In addition, many of the algorithms in HALCON automatically support multiple cores.
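The one-thread-per-camera arrangement can be illustrated with Python's `concurrent.futures`. `inspect_view` is a hypothetical placeholder for the per-image processing. In CPython, pure-Python work in threads is serialized by the global interpreter lock, so a real speed-up depends on the heavy processing running in native code that releases that lock.

```python
from concurrent.futures import ThreadPoolExecutor


def inspect_view(camera_id: int, image: bytes) -> tuple[int, bool]:
    """Hypothetical per-camera processing; returns (camera id, pass/fail)."""
    return camera_id, len(image) > 0  # placeholder check on the image data


def inspect_jar(images: list[bytes]) -> dict[int, bool]:
    """Process all camera views of one jar concurrently, one worker per camera."""
    # The OS schedules the worker threads across the available CPU cores.
    with ThreadPoolExecutor(max_workers=len(images)) as pool:
        futures = [pool.submit(inspect_view, cam, img) for cam, img in enumerate(images)]
        return dict(f.result() for f in futures)
```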

Pneumatic ejection

Once the vision software has determined whether the expiry date code, label and tamper-proof seal are present on a jar, another component of the C# software logs the results of the three inspections. If any of the three is missing, the software uses the data from the opto-reflective sensor and encoder to determine when to fire a set of pneumatic nozzles from SMC (Milton Keynes, England; www.smc.eu) in sequence, ejecting the defective product from the conveyor as it leaves the vision station.

If the vision system is triggered but the software fails to find a product in the captured images, the system automatically initiates the rejection sequence, treating the jar as defective.
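The reject decision and nozzle timing can be sketched as two small functions. The fail-safe rule (reject when the jar is not found, or when any check failed or produced no result) follows the text; the nozzle positions along the belt and the encoder resolution are hypothetical parameters.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class JarResult:
    found: bool                       # False if the jar was not located in the images
    date_code: Optional[bool] = None  # None means the check produced no result
    label: Optional[bool] = None
    seal: Optional[bool] = None


def should_reject(res: JarResult) -> bool:
    """Fail-safe rule: reject unless the jar was found and every check passed."""
    if not res.found:
        return True
    return not (res.date_code is True and res.label is True and res.seal is True)


def nozzle_fire_counts(entry_count: int, nozzle_offsets_mm: list[float],
                       counts_per_mm: float) -> list[int]:
    """Encoder counts at which each nozzle should fire, nearest nozzle first.

    nozzle_offsets_mm: hypothetical distances from the entry sensor to each nozzle.
    """
    return [entry_count + round(d * counts_per_mm) for d in sorted(nozzle_offsets_mm)]
```

Treating a missing result the same as a failed one means that any fault in the inspection chain errs toward rejecting a good jar rather than shipping a bad one.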

To ensure that no faulty products reach customers, the system uses a second IFM opto-reflective sensor, which is triggered if a jar that the vision system has identified as a reject continues down the conveyor. An alarm then alerts the operator that manual intervention is needed to remove the product from the line.
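The exit check can be sketched by predicting, for each jar flagged as a reject, the encoder count at which it would reach the second sensor if the ejection failed. The distance in counts, the matching window and the class name are hypothetical.

```python
class ExitMonitor:
    """Raises an alarm if a jar flagged for rejection reaches the exit sensor."""

    def __init__(self, entry_to_exit_counts: int, window_counts: int) -> None:
        self.entry_to_exit = entry_to_exit_counts
        self.window = window_counts
        self.expected: list[int] = []  # predicted exit counts of rejected jars

    def mark_rejected(self, entry_count: int) -> None:
        self.expected.append(entry_count + self.entry_to_exit)

    def on_exit_sensor(self, count: int) -> bool:
        """Return True if the jar now at the exit sensor should have been ejected."""
        # Rejected jars whose window has passed never arrived, so they were ejected.
        self.expected = [e for e in self.expected if e + self.window >= count]
        for e in self.expected:
            if abs(count - e) <= self.window:
                self.expected.remove(e)
                return True
        return False
```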

Because the system holds a specific shape-based model for each type of jar it has been taught to inspect, an operator can reconfigure it through the HMI touch screen interface to inspect a variety of different product types.

In addition to identifying faulty jars, the software maintains a statistical database that shows the plant operator which types of reject (missing labels, seals or date codes) are occurring. It also records how many of the inspection parameters, such as label color, were within specification during a production run, and produces Excel spreadsheet files so that production personnel can visualize the statistics.
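The reject statistics and spreadsheet export might be produced along these lines. This sketch assumes each jar's results are stored as a mapping from check name to pass/fail and writes CSV, which Excel opens directly; the article does not specify the file format, and the function names are hypothetical.

```python
import csv
import io
from collections import Counter


def reject_summary(results: list[dict[str, bool]]) -> Counter:
    """Count failures per inspection type across a production run."""
    counts: Counter = Counter()
    for jar in results:
        for check, passed in jar.items():
            if not passed:
                counts[check] += 1
    return counts


def summary_csv(counts: Counter, total_jars: int) -> str:
    """Render the counts as CSV text that a spreadsheet program can open."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["check", "failures", "percent_of_jars"])
    for check in sorted(counts):
        writer.writerow([check, counts[check], f"{100.0 * counts[check] / total_jars:.1f}"])
    return buf.getvalue()
```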

The system can also export images of the jars from a production run, enabling engineers working remotely to analyze the effectiveness of the system while in operation, and to perform any software modifications that may be required.

The system can be extended to carry out other inspections, such as reading date codes, by adding new measurement classes. Because measurement classes can use the results of previous measurements, the region found by the local threshold measurement that detects the date code could be passed to an OCR measurement class to read the code. At present, however, AAK is not considering this option.
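The idea of measurement classes feeding later ones can be sketched as a pipeline that passes each step the results gathered so far. Everything here is hypothetical: `DateCodeRegion` uses a fixed darkness cutoff as a stand-in for the local threshold step, and `ReadDateCode` accepts any OCR callable, since the article names no OCR engine.

```python
from typing import Any, Callable, Optional

Gray = list[list[int]]
Box = tuple[int, int, int, int]  # (row0, col0, row1, col1), inclusive


class DateCodeRegion:
    """Stand-in for the local-threshold step: bounding box of dark pixels."""
    name = "date_code_region"

    def __init__(self, dark_below: int = 100) -> None:
        self.dark_below = dark_below

    def run(self, image: Gray, previous: dict[str, Any]) -> Optional[Box]:
        hits = [(r, c) for r, row in enumerate(image)
                for c, v in enumerate(row) if v < self.dark_below]
        if not hits:
            return None
        rows = [r for r, _ in hits]
        cols = [c for _, c in hits]
        return min(rows), min(cols), max(rows), max(cols)


class ReadDateCode:
    """Hypothetical OCR step that reuses the region found by the previous step."""
    name = "date_code_text"

    def __init__(self, reader: Callable[[Gray, Box], str]) -> None:
        self.reader = reader

    def run(self, image: Gray, previous: dict[str, Any]) -> Optional[str]:
        region = previous.get("date_code_region")
        return None if region is None else self.reader(image, region)


def run_pipeline(image: Gray, steps: list) -> dict[str, Any]:
    results: dict[str, Any] = {}
    for step in steps:
        # Each measurement sees the results of the measurements before it.
        results[step.name] = step.run(image, results)
    return results
```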

Developed over two months (July and August) at a cost of less than $40,000, the Adbro vision-based inspection system was installed earlier this year at the AarhusKarlshamn food production facility in Runcorn, UK, where it now inspects jars of food products at a rate of 150 jars per minute.
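Taken together with the 0.5 m/s conveyor speed, the quoted throughput implies an average time budget and jar spacing, assuming evenly spaced jars:

```python
BELT_SPEED_M_S = 0.5   # conveyor speed quoted in the article
JARS_PER_MIN = 150     # throughput quoted in the article

seconds_per_jar = 60 / JARS_PER_MIN                   # average time between jars
pitch_mm = BELT_SPEED_M_S * 1000 * seconds_per_jar    # average centre-to-centre spacing
print(f"{seconds_per_jar:.1f} s per jar, {pitch_mm:.0f} mm average pitch")
# prints: 0.4 s per jar, 200 mm average pitch
```

On average a new jar arrives every 0.4 s, which bounds the time available for imaging, analysis and the reject decision per jar unless processing overlaps across consecutive jars.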

Author: Greig Lambourne

Article kindly provided by Vision Systems Design.
