To this end, HDevelop’s procedures provide you with performance indicators, that is, numbers achieved by the current neural network model on the evaluation data set: the top-k error, precision and recall, and the (interactive!) confusion matrix.
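
To make these indicators concrete, here is a minimal Python sketch of how they can be derived from per-class confidences. It is not HDevelop code and does not reproduce HALCON's procedures; the function names and the toy data are assumptions chosen for illustration only.

```python
def top_k_error(scores, labels, k):
    """Fraction of samples whose true label is not among the k best-scored classes."""
    misses = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


def confusion_matrix(scores, labels, num_classes):
    """matrix[t][p] counts samples of true class t that were predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for row, label in zip(scores, labels):
        predicted = max(range(len(row)), key=lambda c: row[c])
        matrix[label][predicted] += 1
    return matrix


def precision_recall(matrix, cls):
    """Precision and recall of one class, read off the confusion matrix."""
    true_positives = matrix[cls][cls]
    predicted_as_cls = sum(row[cls] for row in matrix)  # column sum
    actually_cls = sum(matrix[cls])                     # row sum
    precision = true_positives / predicted_as_cls if predicted_as_cls else 0.0
    recall = true_positives / actually_cls if actually_cls else 0.0
    return precision, recall


# Hypothetical evaluation set: six samples, three classes.
scores = [
    [0.7, 0.2, 0.1],
    [0.1, 0.6, 0.3],
    [0.2, 0.5, 0.3],
    [0.5, 0.1, 0.4],
    [0.1, 0.2, 0.7],
    [0.6, 0.1, 0.3],
]
labels = [0, 1, 2, 2, 2, 1]

matrix = confusion_matrix(scores, labels, num_classes=3)
print("top-1 error:", top_k_error(scores, labels, 1))
print("top-2 error:", top_k_error(scores, labels, 2))
print("confusion matrix:", matrix)
print("precision/recall of class 2:", precision_recall(matrix, 2))
```

In this toy data, class 2 has perfect precision but poor recall. The confusion matrix shows why: two of its three samples are assigned to other classes.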

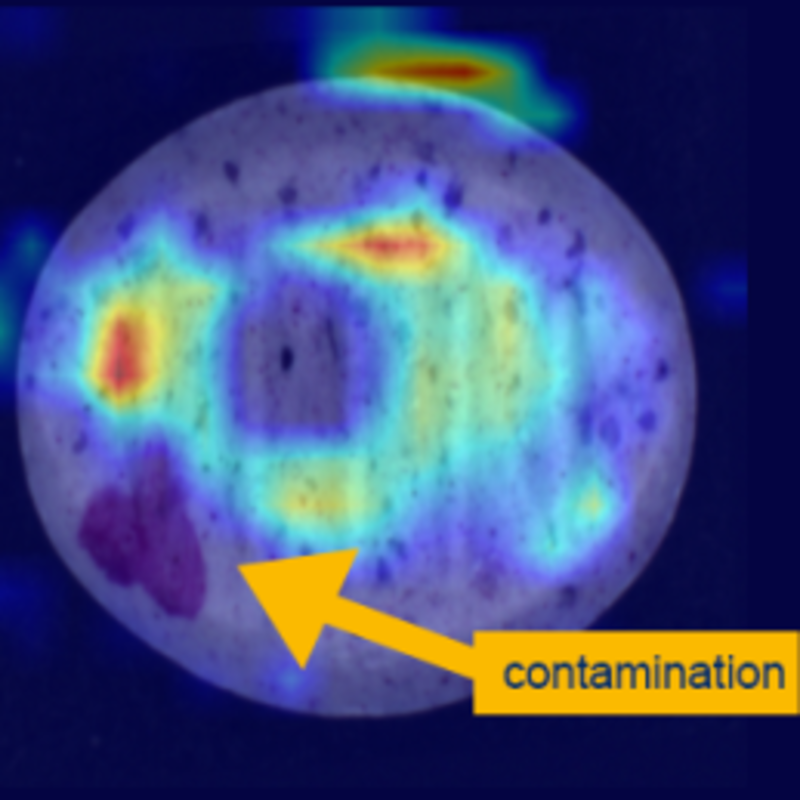

Besides those “dry” numbers, HDevelop’s heatmap procedures help you understand the model: for a given class, the heatmap of a sample reveals with high spatial resolution how strongly the various regions of the sample activate (heat up) the model. You see immediately whether the learned features are reasonable or not.

Only the feature heatmap gives insights into the model.

In all current HALCON editions, a heatmap based on the Gradient-weighted Class Activation Mapping (Grad-CAM) algorithm [1] comes as an alternative to the confidence-based heatmap. It is almost instantaneous, much more robust, and does not require any parametrization.

For evaluation purposes, it is enough to look at the performance indicators and to review some heatmaps of samples of every(!) class. As an evaluation method, this is straightforward and quick. If both the indicators and the heatmaps are OK, you are done. If not, the heatmap gives you visual guidance back to the imperfections in the training, as in the following example:

Assume you have trained a fruit classifier whose indicators happen to be excellent. But a glance at the heatmap of an apple image shows that the activity is located outside the actual apple instance. It might show activity in a local background pattern that is consistently present in the apple image set but never shows up in the image set representing pears.

Here the heatmap pinpoints the evidence for a training bias. This is the starting point for reconsidering the environmental conditions, the illumination, the training parameters, etc.
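
The visual check described above can also be approximated numerically when a rough object mask is available. The following Python sketch measures how much of the heatmap's total activity falls inside the object. The mask, the function name `heat_inside_object`, and the toy values are hypothetical and not part of HALCON; the sketch only illustrates the idea.

```python
def heat_inside_object(heatmap, mask):
    """Share of the total heatmap activity that lies on object pixels (0.0 to 1.0)."""
    total = 0.0
    inside = 0.0
    for heat_row, mask_row in zip(heatmap, mask):
        for heat, is_object in zip(heat_row, mask_row):
            total += heat
            if is_object:
                inside += heat
    return inside / total if total > 0 else 0.0


# Hypothetical 3x3 heatmap of an apple image: most activity sits in the left
# column, which here stands for a background pattern.
heatmap = [
    [0.9, 0.1, 0.0],
    [0.8, 0.1, 0.0],
    [0.0, 0.1, 0.0],
]
# Hypothetical mask: the apple occupies the middle column.
apple_mask = [
    [False, True, False],
    [False, True, False],
    [False, True, False],
]

share = heat_inside_object(heatmap, apple_mask)
print("share of activity on the apple:", round(share, 2))
```

A low share, as in this toy case, would suggest that the model reacts to the background rather than to the fruit. What counts as "low" depends on the application and is left open here.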

It should be noted that the Grad-CAM heatmap is computed from different layers of the trained neural network, thus emphasizing local as well as semantic features. Because of this, the heatmap cannot be used as a basis for segmentation.
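
For orientation, the core step of Grad-CAM as described in the original publication can be sketched in a few lines of Python. The sketch works on one layer only and takes the feature maps and their gradients as given. It makes no claim about how HALCON implements its heatmap, which according to the text above involves several layers. All names and values are illustrative assumptions.

```python
def grad_cam(activations, gradients):
    """Single-layer Grad-CAM.

    activations: K feature maps of one layer, each a list of H rows with W values.
    gradients:   gradients of the class score with respect to those feature maps,
                 in the same shape.
    Returns an H x W heatmap scaled to the range 0..1.
    """
    height = len(activations[0])
    width = len(activations[0][0])

    # Channel weight: spatial mean of the gradient of that channel.
    weights = [sum(sum(row) for row in grad) / (height * width) for grad in gradients]

    # Weighted sum of the feature maps.
    heatmap = [[0.0] * width for _ in range(height)]
    for weight, feature_map in zip(weights, activations):
        for y in range(height):
            for x in range(width):
                heatmap[y][x] += weight * feature_map[y][x]

    # ReLU: keep only regions that speak in favor of the class.
    heatmap = [[max(0.0, value) for value in row] for row in heatmap]

    # Scale to 0..1 for display, unless the map is empty.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[value / peak for value in row] for row in heatmap]
    return heatmap


# Toy input: two 2x2 feature maps. The first channel supports the class
# (positive gradient), the second one speaks against it (negative gradient).
activations = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]

cam = grad_cam(activations, gradients)
print(cam)
```

Only the top-left position stays hot in this toy input: the bottom-right activation belongs to a channel with negative weight and is removed by the ReLU. Because the result has the coarse resolution of the feature maps, it indicates where evidence for a class lies rather than where the object boundary is.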

[1] This algorithm is based on a publication, which is cited in the HALCON operator reference.

The heatmap on the left, for instance, was computed for the class “contamination” on two pill samples: