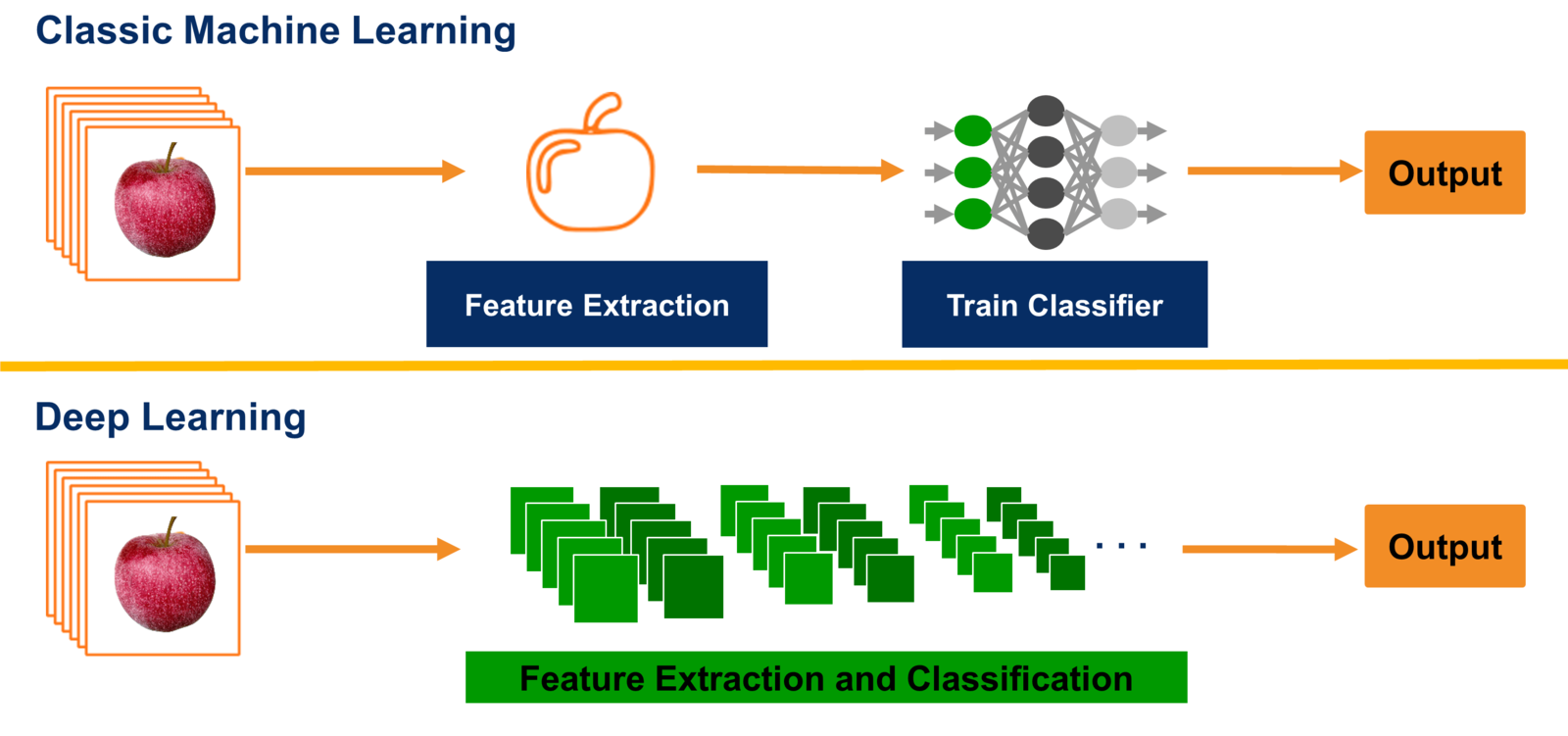

Classic machine vision vs. deep learning

When comparing deep learning with traditional machine vision methods, the biggest difference lies in the way feature extraction is performed.

With traditional methods, the vision engineer must decide which features to look for to detect a certain object in an image, and must also select the correct set of features for each class. This quickly becomes cumbersome as the number of possible classes grows. Are you looking for color information? Edges? Texture? Depending on the number of features used, many parameters also have to be fine-tuned manually by the engineer.
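The manual workflow described above can be illustrated with a minimal sketch. It assumes tiny grayscale images stored as lists of rows, and every function name, feature, and threshold here is hypothetical, chosen only to show how much has to be decided by hand.

```python
# Hypothetical hand-crafted feature pipeline; names and thresholds are illustrative only.

def mean_intensity(img):
    """Average gray value of a 2D image given as a list of rows (values 0..255)."""
    pixels = [p for row in img for p in row]
    return sum(pixels) / len(pixels)


def edge_density(img, edge_threshold=50):
    """Fraction of horizontally adjacent pixel pairs whose difference exceeds edge_threshold."""
    pairs = 0
    edges = 0
    for row in img:
        for left, right in zip(row, row[1:]):
            pairs += 1
            if abs(left - right) > edge_threshold:
                edges += 1
    return edges / pairs if pairs else 0.0


def classify(img, brightness_threshold=128, density_threshold=0.3):
    """Rule-based decision: each threshold below was picked by hand."""
    if edge_density(img) > density_threshold:
        return "textured"
    if mean_intensity(img) > brightness_threshold:
        return "bright"
    return "dark"


# Three toy 4x4 images, one per class.
striped = [[0, 255, 0, 255]] * 4
flat_bright = [[200, 200, 200, 200]] * 4
flat_dark = [[20, 20, 20, 20]] * 4

for name, img in [("striped", striped), ("flat_bright", flat_bright), ("flat_dark", flat_dark)]:
    print(name, "->", classify(img))
```

Adding a fourth class would mean choosing another feature and another threshold, which is the scaling problem the paragraph describes.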

In contrast, deep learning uses the concept of “end-to-end learning”. Here, the algorithm is simply told to learn what to look for with respect to each specific class. By analyzing sample images, it automatically works out the most prominent and descriptive features for each class or object.
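The idea of learning from examples instead of hand-picking features can be sketched in a few lines. Note the assumptions: this is a single-layer logistic model trained with plain gradient descent, not a deep network, and the 2x2 toy images, labels, and hyperparameters are invented for illustration. It only shows the principle that the weights on the raw pixels come from the samples, not from the engineer.

```python
import math


def train(samples, labels, epochs=200, lr=0.5):
    """Fit a logistic model directly on raw pixel values (no hand-crafted features)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            pred = 1.0 / (1.0 + math.exp(-z))  # sigmoid
            error = pred - y                   # gradient of the log loss w.r.t. z
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return class 1 if the learned score is positive, else class 0."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if z > 0 else 0


# Flattened 2x2 toy images with pixel values in [0, 1].
# Class 1: bright left column. Class 0: bright right column.
samples = [
    [1.0, 0.0, 1.0, 0.0],
    [0.9, 0.1, 0.8, 0.2],
    [0.0, 1.0, 0.0, 1.0],
    [0.1, 0.9, 0.2, 0.8],
]
labels = [1, 1, 0, 0]

weights, bias = train(samples, labels)
unseen = [0.8, 0.2, 0.9, 0.1]  # bright left column, not in the training set
print("learned weights:", [round(w, 2) for w in weights])
print("prediction for unseen image:", predict(weights, bias, unseen))
```

Nobody told the model that the left column matters; the positive weights on the left-column pixels emerge from the labeled samples. A deep network extends the same principle across many layers, so that intermediate features are learned as well.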

Which method to choose?

Traditional methods and deep learning each have distinct areas where they excel. At the same time, the two should not be treated as mutually exclusive: many applications benefit from combining traditional, rule-based approaches with deep learning components. Whether to choose one of the two or a mixture usually depends on the type of application and its characteristics. The amount of available data and the available computing power also need to be considered.

| | Deep learning | Traditional methods |
|---|---|---|
| Typical applications | | |
| Application characteristics | | |